We attended the XXVIII International Evoked Response Audiometry Study Group (IERASG) Biennial Symposium, held in Cologne (Germany) from the 15th to the 19th of September 2023.

IERASG is a society that provides an open forum to advance global understanding and practice in the field of evoked responses. IERASG meets once every two years at the Biennial Symposium, and is an affiliate member of the International Society of Audiology.

At the IERASG-2023 symposium, we presented two contributions about signal-processing methods that enable deconvolution of overlapping auditory evoked responses, and one additional presentation that involved a live demonstration of a flexible, robust, and inexpensive system for recording auditory evoked potentials.

Contributions

1. Multi-response deconvolution

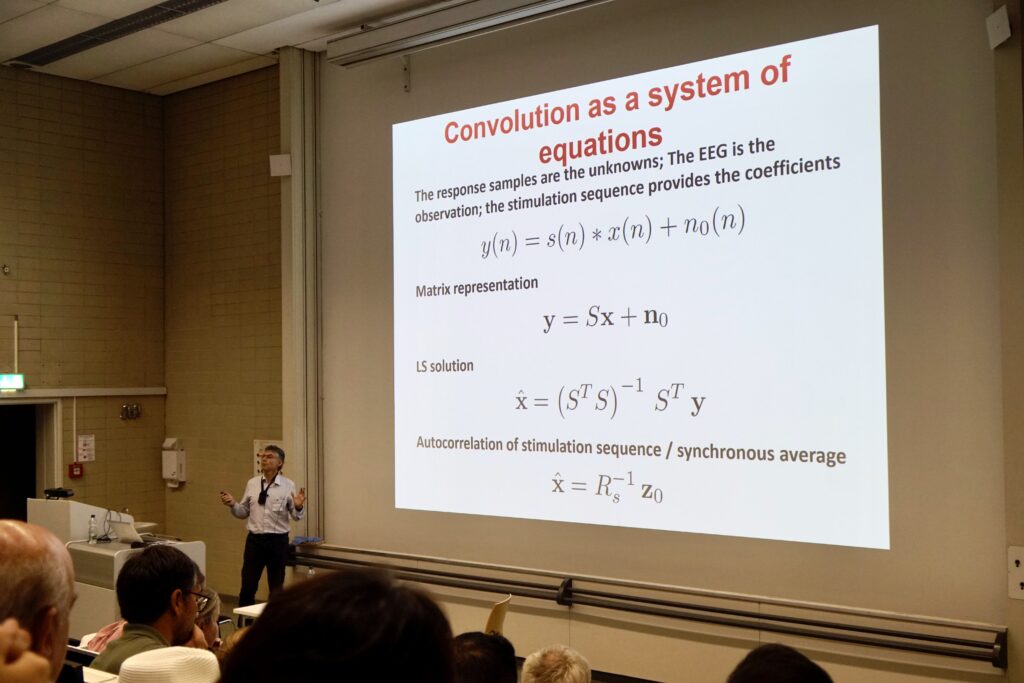

Prof Ángel de la Torre presented the fundamentals of multi-response deconvolution, i.e., a signal-processing algorithm that provides the least-squares estimation of multiple categories of deconvolved auditory evoked potentials.

Importantly, deconvolution can be performed in the reduced representation space defined by ‘latency-dependent filtering and downsampling’ (de la Torre et al., 2020). This reduces processing time significantly, and makes deconvolution feasible when long-duration responses and/or several categories are considered.
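To make the least-squares idea concrete, the following is a minimal sketch in Python, not the algorithm presented at the symposium. It assumes the recording is the sum of one unknown response per stimulus category, repeated at known stimulus onsets, plus noise, and it works in the full sample space rather than in the reduced representation space. The function names (`design_matrix`, `deconvolve`), the synthetic responses, and all numeric settings are hypothetical choices made for illustration.

```python
import numpy as np

def design_matrix(onsets, n_samples, resp_len):
    """Convolution matrix for one category: column j marks the samples
    that lie j samples after each stimulus onset."""
    S = np.zeros((n_samples, resp_len))
    for t in onsets:
        lags = np.arange(min(resp_len, n_samples - t))
        S[t + lags, lags] += 1.0
    return S

def deconvolve(eeg, onsets_per_category, resp_len):
    """Least-squares estimate of one response per category from a
    recording in which the responses overlap."""
    S = np.hstack([design_matrix(o, len(eeg), resp_len)
                   for o in onsets_per_category])
    x_hat, *_ = np.linalg.lstsq(S, eeg, rcond=None)
    return x_hat.reshape(len(onsets_per_category), resp_len)

# Synthetic demonstration (all values are illustrative assumptions).
rng = np.random.default_rng(0)
n_samples, resp_len = 20000, 60
t = np.arange(resp_len)
true = np.stack([
    np.sin(2 * np.pi * t / 30) * np.exp(-t / 20),
    -0.5 * np.sin(2 * np.pi * t / 20) * np.exp(-t / 15),
])

# Jittered inter-stimulus intervals shorter than the response length,
# so consecutive responses overlap in the recording.
onsets = np.cumsum(rng.integers(15, 45, size=600))
onsets = onsets[onsets < n_samples - resp_len]
labels = rng.integers(0, 2, size=onsets.size)  # random category per stimulus
per_cat = [onsets[labels == k] for k in range(2)]

# Simulated recording: overlapping responses plus Gaussian noise.
eeg = sum(design_matrix(o, n_samples, resp_len) @ x
          for o, x in zip(per_cat, true))
eeg = eeg + 0.05 * rng.standard_normal(n_samples)

est = deconvolve(eeg, per_cat, resp_len)
```

In this sketch the cost of the solve grows with the number of categories times the response length in samples, which gives some intuition for why a reduced representation becomes attractive for long responses or many categories.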

Multi-response deconvolution provides researchers and clinicians with a tool to analyse auditory evoked potentials with great flexibility, and holds potential to advance knowledge in hearing neuroscience.

A scientific paper describing this method is currently under review. The abstract of this conference contribution, the presentation slides, and a text describing the slides are available at the links below.

2. Deconvolution overview

Dr Joaquín T. Valderrama presented an overview of a series of signal-processing algorithms developed by the ‘Signal Processing in Audiology’ research team related to advanced processing of auditory evoked potentials, including ‘matrix deconvolution’ (de la Torre et al., 2019), ‘latency-dependent filtering and downsampling’ (de la Torre et al., 2020), ‘subspace-constrained deconvolution’ (de la Torre et al., 2022), and ‘multi-response deconvolution’ (under review).

The potential value of these methodologies for clinical and research applications was illustrated by several examples, such as the recording of auditory evoked potentials for hearing-threshold estimation, for binaural-hearing assessment, to study non-linear processes like neural adaptation, or to characterise the multiple responses evoked by complex stimuli.

3. Live demonstration

Prof Ángel de la Torre performed a live demonstration of a portable, inexpensive, and robust system for recording auditory evoked potentials, mostly based on consumer electronics. The session was conducted in the conference room, where auditory brainstem and middle latency evoked potentials were recorded simultaneously using clicks presented at an average rate of 44.4 Hz.

The abstract, the presentation slides, and a text describing the slides are available at the links below. In addition, “Demo_Nov_2023.zip” is a temporary supplemental file that includes MATLAB functions and scripts, binary files, and figures presenting results (available until the 2nd of December 2023). “Demo_Nov_2023_reduced.zip” is a permanent file from which the binary files and some figures are excluded. Should the temporary file have expired, please contact the authors at atv@ugr.es.

- Abstract

- Presentation slides

- Slides description

- Supplemental file (583 MB): “Demo_Nov_2023.zip” (Available until 02/12/2023)

- Supplemental file reduced (6 MB): “Demo_Nov_2023_reduced.zip” (Binary files and detailed figures excluded)

- Previous demonstration at the 2023 International Workshop on Advances in Audiology (Salamanca, June 2023), which includes a video of the recording session

Social program

We would like to thank the Chair of the conference, Prof Martin Walger (University of Cologne, Germany), the IERASG Chair, Prof Suzanne Purdy (University of Auckland, New Zealand), and the Organising Committee for the excellent organisation of the conference and for the highly entertaining social program, which included a forest hike to Dragon's Rock, a boat trip with dinner, a get-together in a wine cellar, a private visit and concert by the girls' choir of the Cologne Cathedral, and, naturally, a Cologne brewery tour.

Photography credit: Ms. Paula Rieger.